The state of AI regulation in 2024

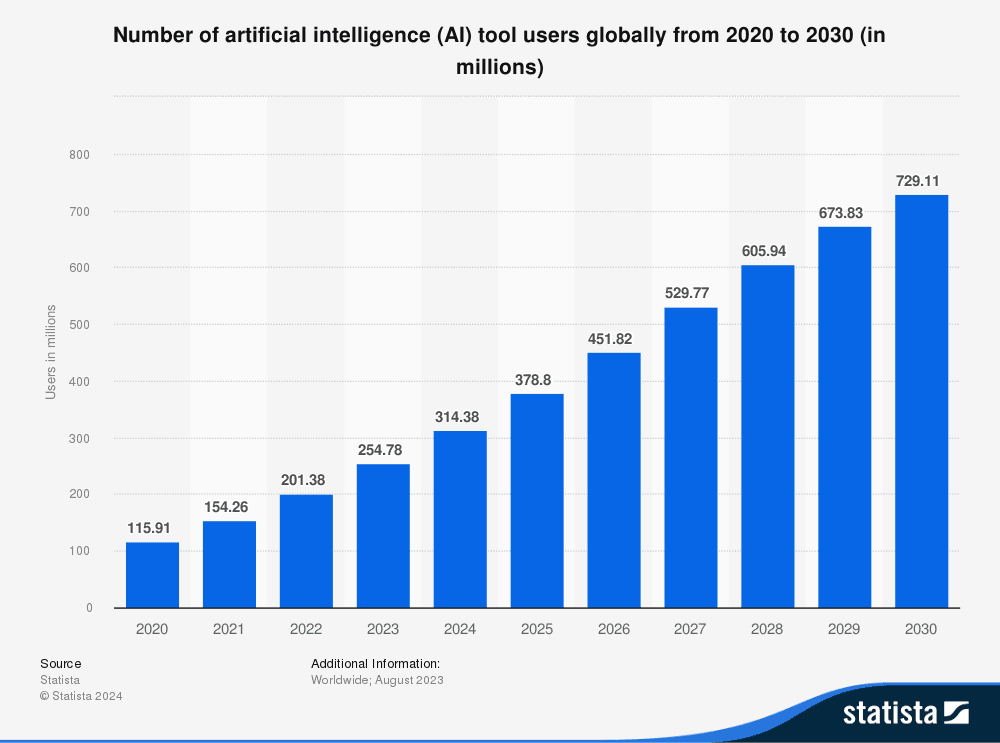

In the last 4 years, the use of generative AI technology has exploded. Users can now generate text, images, audio and video in their browser, all from a simple prompt. Indeed, estimates suggest that the number of AI tool users worldwide will increase from 314 million in 2024 to 729 million in 2030 (Figure 1).

Figure 1: Forecast of AI tool users globally

But this growth has also led to increasing concern about the use of generative AI technologies. In Microsoft’s Global Online Safety Survey 2024, which had 16,795 respondents:

71% responded that they were very worried about scams generated with AI;

69% responded that they were very worried about deepfakes generated with AI;

66% responded that they were very worried about AI hallucinations; and

62% responded that they were very worried about data privacy.

As governments seek to combat cybercrime and misinformation, regulation of the development, use and distribution of generative AI technologies is increasing. Governments are seeking to strike the right balance between innovation and regulation. For example:

The EU has enacted the world’s first comprehensive AI-specific legislation (the EU AI Act) to regulate the development and distribution of AI systems, taking a risk-based approach similar to that of the GDPR.

Canada has proposed the Artificial Intelligence and Data Act (AIDA), which has not yet been enacted, to regulate ‘high-impact’ AI systems.

Brazil has introduced Bill 2338/2023 (the Brazil AI Bill), which has not yet been enacted.

India is developing a proposed Digital India Act, with a renewed focus on the regulation of high-risk AI applications.

In this post, we’ll explore 3 key insights into the current state of AI regulation. We’ll then discuss what this means for businesses that develop AI or deploy it in their operations.

Insight 1: Data protection and other legislation still applies to the use of AI

The use of AI technology is still subject to existing regulatory regimes covering data protection, consumer protection and business conduct more broadly. For example, the EU AI Act explicitly states that it should be ‘without prejudice to existing Union law, in particular on data protection, consumer protection …’.

Accordingly, businesses still need to ensure their operations align with the requirements of regimes such as:

Data Protection Regimes: the GDPR (EU/UK), the Privacy Act 1988 (Australia), the CCPA (California, USA);

ePrivacy Regimes: the ePrivacy Directive (EU), the Privacy and Electronic Communications Regulations (UK), the CAN-SPAM Act (USA), the Spam Act 2003 (Australia); and

Consumer Protection Regimes: the Competition and Consumer Act 2010 (Australia), the Consumer Rights Act 2015 (UK).

The use of new or innovative technologies like AI does not exempt a business from any applicable regulations. Indeed, many countries, including Australia, the USA and the UK, have continued to rely on their existing legislation to regulate the use of AI.

Insight 2: The EU has enacted a world-first regulatory regime that is specific to AI technologies

By contrast, the EU has enacted a world-first regulatory regime, the EU AI Act (Regulation (EU) 2024/1689), which complements existing regulations. It imposes requirements on developers, providers and deployers of AI systems based on the level of risk posed by the AI system.

The EU AI Act applies broadly to AI systems placed on the market or used in the EU, including where a provider or deployer is located outside the EU but the output produced by the AI system is used in the EU (Article 2(1)(c)). It splits AI systems into 4 categories:

Prohibited

An exhaustive list of AI practices that are banned under Article 5. This generally includes:

Deceptive AI that causes harm: AI systems that use manipulative or deceptive techniques to cause users to make decisions that can cause significant harm;

Exploitative AI: AI systems that exploit vulnerabilities of people due to their age, disability, or social or economic situation to cause them to make decisions that can cause significant harm;

Social Scoring AI: AI systems that evaluate or classify persons based on their social behaviour or personal characteristics, leading to detrimental or unfavourable treatment;

Criminal prediction AI: AI systems that assess or predict the risk of a person committing a criminal offence based solely on profiling or on an assessment of their personality traits or characteristics;

Facial scraping: AI systems that create or expand facial recognition databases through untargeted scraping of facial images from the internet or CCTV footage;

Emotion Inference AI: AI systems that infer the emotions of a person in a workplace or educational institution (except where used for medical or safety reasons);

Sensitive Inference AI: biometric categorisation systems that use a person’s biometric data to infer sensitive information about them (eg, race, political opinions, trade union membership, religious beliefs, sex life or sexual orientation); and

Real-time remote biometric identification: the use of ‘real-time’ remote biometric identification systems in publicly accessible spaces for law enforcement purposes, subject to narrow exceptions.

High Risk

AI systems that are (or are safety components of) products covered by certain EU product safety legislation (listed in Annex I), or that fall into one of the following areas (listed in Annex III):

Biometrics

Critical infrastructure

Education and vocational training (eg, to determine admission, evaluate learning outcomes, or assess the appropriate level of education)

Employment (eg, to evaluate candidates, or to monitor or evaluate work performance)

Access to essential private or public services

Law enforcement

Migration, asylum and border control management

Administration of justice and democratic processes.

General Purpose

An AI model that displays ‘significant generality and is capable of competently performing a wide range of distinct tasks regardless of the way the model is placed on the market and that can be integrated into a variety of downstream systems or applications’: Article 3(63). Additional obligations apply to general-purpose AI models with ‘systemic risk’, that is, high-impact capabilities with the potential to have a significant impact on the EU market, public health, safety or society, that can be propagated at scale across the value chain.
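The ‘systemic risk’ tier has one quantitative hook: under Article 51(2), a general-purpose AI model is presumed to have high-impact capabilities when the cumulative compute used for its training is greater than 10^25 floating-point operations (FLOPs). The sketch below checks a model against that presumption. It assumes the common rule of thumb that training a dense transformer takes roughly 6 FLOPs per parameter per training token; the Act does not prescribe this estimate, the model sizes are hypothetical, and the Commission can also designate models as having systemic risk on other criteria (Annex XIII).

```python
# Article 51(2) EU AI Act: a general-purpose AI model is presumed to have
# high-impact capabilities above this cumulative training compute (in FLOPs).
SYSTEMIC_RISK_FLOP_THRESHOLD = 1e25


def estimate_training_flops(n_parameters: float, n_training_tokens: float) -> float:
    """Rough training-compute estimate for a dense transformer.

    Assumption (not from the Act): about 6 FLOPs per parameter per
    training token, covering the forward and backward passes.
    """
    return 6.0 * n_parameters * n_training_tokens


def presumed_systemic_risk(training_flops: float) -> bool:
    """True if compute is strictly greater than the Article 51(2) threshold."""
    return training_flops > SYSTEMIC_RISK_FLOP_THRESHOLD


if __name__ == "__main__":
    # Hypothetical models: (name, parameters, training tokens).
    for name, params, tokens in [("model_a", 70e9, 15e12), ("model_b", 400e9, 15e12)]:
        flops = estimate_training_flops(params, tokens)
        print(f"{name}: {flops:.1e} FLOPs, presumed systemic risk: {presumed_systemic_risk(flops)}")
```

With these hypothetical figures, model_a comes out at about 6.3e+24 FLOPs (below the threshold) and model_b at about 3.6e+25 FLOPs (above it). Because the estimate is only a heuristic, a model close to the threshold would warrant a more careful compute accounting.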

Other

Any other AI systems that do not fall into one of the above categories. This includes AI systems that fall into one of the ‘high-risk’ areas but do not pose a significant risk of harm to the health, safety or fundamental rights of natural persons, including where any of the following conditions is fulfilled (unless the AI system performs profiling of natural persons) (Article 6(3)):

The AI system is intended to perform a narrow procedural task;

The AI system is intended to improve the result of a previously completed human activity;

The AI system is intended to detect decision-making patterns (or deviations from prior patterns) and is not meant to replace or influence a previously completed human assessment without proper human review; or

The AI system is intended to perform a preparatory task to an assessment done by humans.
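The decision order described above can be sketched as a simple triage function. This is a deliberately simplified sketch: the field names are hypothetical, each flag stands in for a legal question that needs its own analysis, general-purpose AI models are left out because their obligations attach to the model rather than to a particular system, and an ‘other’ result may still carry transparency obligations.

```python
from dataclasses import dataclass
from enum import Enum


class RiskCategory(Enum):
    PROHIBITED = "prohibited"
    HIGH_RISK = "high-risk"
    OTHER = "other"


@dataclass
class AISystemProfile:
    """Hypothetical yes/no answers recorded for one AI system (illustrative field names)."""

    # Any practice on the Article 5 list.
    uses_article_5_practice: bool
    # Product or safety component under Annex I legislation requiring
    # third-party conformity assessment.
    annex_i_product: bool
    # Intended use falls in one of the Annex III areas.
    annex_iii_area: bool
    profiles_natural_persons: bool
    # Article 6(3) conditions (only relevant for Annex III systems).
    narrow_procedural_task: bool = False
    improves_completed_human_activity: bool = False
    detects_patterns_with_human_review: bool = False
    preparatory_task_only: bool = False


def triage(system: AISystemProfile) -> RiskCategory:
    """Simplified first-pass classification in the order described above."""
    if system.uses_article_5_practice:
        return RiskCategory.PROHIBITED
    if system.annex_i_product:
        return RiskCategory.HIGH_RISK
    if system.annex_iii_area:
        # Annex III systems that profile natural persons are always high-risk.
        if system.profiles_natural_persons:
            return RiskCategory.HIGH_RISK
        exempt = any(
            (
                system.narrow_procedural_task,
                system.improves_completed_human_activity,
                system.detects_patterns_with_human_review,
                system.preparatory_task_only,
            )
        )
        return RiskCategory.OTHER if exempt else RiskCategory.HIGH_RISK
    return RiskCategory.OTHER


# Hypothetical tool that ranks job applicants (employment area, assumed to profile).
ranker = AISystemProfile(
    uses_article_5_practice=False,
    annex_i_product=False,
    annex_iii_area=True,
    profiles_natural_persons=True,
)
print(triage(ranker).value)  # high-risk

# Hypothetical tool that only converts admission forms into structured data.
parser = AISystemProfile(
    uses_article_5_practice=False,
    annex_i_product=False,
    annex_iii_area=True,
    profiles_natural_persons=False,
    narrow_procedural_task=True,
)
print(triage(parser).value)  # other
```

Ordering the checks this way mirrors the structure described in this post: a prohibited practice is banned regardless of any other classification, and the profiling check overrides the Article 6(3) conditions.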

When do requirements come into effect?

Under the EU AI Act, the requirements applicable to each category of AI system apply from different dates:

Prohibited AI practices will be banned in the EU from 2 February 2025.

Developers and providers of high-risk AI systems will need to meet strict regulatory requirements around risk management, data governance, cybersecurity, record-keeping (including logs), post-market monitoring, registration and reporting from 2 August 2026 (for systems in the Annex III areas) or 2 August 2027 (for AI systems covered by the Annex I product safety legislation).

Providers of general-purpose AI models will need to meet their own requirements under Chapter V from 2 August 2025.

Certain other AI systems (eg, those that interact directly with people or generate synthetic content) will need to meet transparency requirements under Article 50 from 2 August 2026.
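Because obligations phase in on different dates, a compliance team might track them as a simple lookup table. The sketch below encodes the application dates set out in Article 113 of the EU AI Act; the category labels are hypothetical, and transitional rules (for example, for general-purpose AI models already on the market before 2 August 2025) are not modelled.

```python
from datetime import date

# Application dates under Article 113 of the EU AI Act (Regulation (EU) 2024/1689).
# The dictionary keys are illustrative labels, not terms used in the Act.
APPLICATION_DATES = {
    "prohibited_practices": date(2025, 2, 2),
    "general_purpose_ai_models": date(2025, 8, 2),
    "transparency_article_50": date(2026, 8, 2),
    "high_risk_annex_iii": date(2026, 8, 2),
    "high_risk_annex_i": date(2027, 8, 2),
}


def obligations_in_effect(on: date) -> list[str]:
    """Categories whose obligations already apply on the given date, sorted by name."""
    return sorted(category for category, start in APPLICATION_DATES.items() if on >= start)


def days_until(category: str, today: date) -> int:
    """Days until a category's obligations apply (0 if they already apply)."""
    return max(0, (APPLICATION_DATES[category] - today).days)


print(obligations_in_effect(date(2025, 9, 1)))
# ['general_purpose_ai_models', 'prohibited_practices']
print(days_until("high_risk_annex_iii", date(2026, 8, 1)))
# 1
```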

Insight 3: There are significant parallels between the enactment of data protection regimes and AI-specific regulatory regimes

The EU AI Act shares several similarities with the GDPR, including its risk-based approach to regulation:

Each Member State will designate its own national regulator for the EU AI Act.

Regulatory requirements imposed under the EU AI Act scale with the risk the AI system poses to persons.

Much of the regulation is drafted in general terms, meaning that the EU AI Act preserves flexibility in light of the rapid advancement of AI technology.

However, this flexibility comes at the cost of clarity for developers and users of AI models. Just as the GDPR does not specify which technical controls are required to adequately protect personal data (instead requiring data controllers to implement measures appropriate to the risk), the EU AI Act is light on the specifics of what is required in practice.

Accordingly, businesses and organisations will need further guidance from regulators (including national regulators and the European Commission) to interpret and apply the provisions of the EU AI Act.

What this means: Regulation over AI technology is likely to increase

Nevertheless, if there is anything we can learn from the implementation of the GDPR, it is that regulation of AI technology is likely to increase following the enactment of the EU AI Act, much as many jurisdictions went on to adopt GDPR-inspired data protection laws.

Governments across the globe are already scrutinising this space to combat increasing cyber and misinformation risks. The EU AI Act is the first comprehensive model that governments and businesses can use to determine how to use AI safely and reduce the risks it poses to people and society.

While most provisions of the EU AI Act only apply from 2026-2027 (with the exception of the prohibitions, which apply from 2 February 2025, and the obligations for general-purpose AI models, which apply from 2 August 2025), the Act is likely to be a helpful framework for businesses to assess the risks posed by their use of AI and to obtain an early indication of how a regulator may assess that use.

How should I get started?

A helpful starting point for businesses would be to:

Assess whether your AI technology would be prohibited under EU law;

Assess whether your AI technology would be considered ‘high-risk’ under EU law; and

If your AI technology interacts with people, ensure that it clearly discloses that they are interacting with, or receiving outputs from, an AI (rather than a human).